In recent years, Artificial Intelligence (AI) has been getting much more attention, both for its capabilities and for its potential dangers. Warnings about those dangers have of course been around for quite a while, either explicitly in the news or else implicitly in our popular culture.

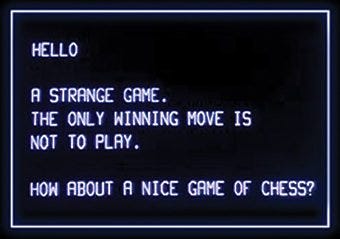

Almost forty years have passed since that gem of a movie was produced, and luckily, we haven’t had to worry (too much) about AI killing us all in a nice game of “Global Thermonuclear War”, but for those of us in the Ivory Tower there is a new cause for concern: ChatGPT.

The short story here is that OpenAI has recently released a new version of a text completion AI called ChatGPT, and I’m pretty blown away by it. The cause for concern for those of us in the academy is the combination of the following:

- the AI is incredibly powerful,
- it is incredibly easy to use, and
- it can be used to complete many writing assignments (both essays and computer programming) with some rather simple prompts.

Now, you might not trust random blog/substack writers (like me), so if that’s the case, please take a look at this piece in The Atlantic, titled The College Essay Is Dead: Nobody is prepared for how AI will transform academia. To quote from that article:

“The essay, in particular the undergraduate essay, has been the center of humanistic pedagogy for generations. It is the way we teach children how to research, think, and write. That entire tradition is about to be disrupted from the ground up. Kevin Bryan, an associate professor at the University of Toronto, tweeted in astonishment about OpenAI’s new chatbot last week: ‘You can no longer give take-home exams/homework … Even on specific questions that involve combining knowledge across domains, the OpenAI chat is frankly better than the average MBA at this point. It is frankly amazing.’ Neither the engineers building the linguistic tech nor the educators who will encounter the resulting language are prepared for the fallout.”

That claim “better than the average MBA at this point” should give us pause. It may be somewhat overblown… and then again, it may not. What I want to do with this post is give some examples of the capabilities of this text completion/generation AI (particularly in the academic context), and maybe inspire you to check it out for yourself. Actually, what I really want to discuss is how this will affect academic integrity issues on campus (i.e. plagiarism and plagiarism-adjacent issues), but I can’t really make that point until you see the capabilities of this AI. So let’s get to it.

To get started, check out the OpenAI application at this link:

https://beta.openai.com/playground

You’ll need to create an account to access the program, but it is free and takes only a moment to do so. Once you have verified your email address, you can follow the above link to the “playground” and enter text and then hit submit. The AI will respond to questions or writing prompts. You can carry on “conversations” or adjust the text and re-submit for additional text generation. To give you an idea of the capabilities of ChatGPT, let me present a few examples that I’ve seen so far. The first comes from my own general area of mathematics research (symplectic geometry), and the example is a playful combination of topics which don’t actually mesh (but… humorously almost do).

Input: Write an introduction to Lagrangian Floer Homology in the style of a Lovecraft novel. Include a description of the horror of bubbling of pseudoholomorphic disks.

ChatGPT: It was a dark and stormy night when I first encountered the horror of Lagrangian Floer Homology. I had stumbled upon a forgotten library in the depths of an ancient temple. Amidst the musty tomes, I discovered a manuscript that described a strange and unnatural form of mathematics. It spoke of a realm of perverse topology, where the mysterious and unfathomable bubbling of pseudoholomorphic disks was ever-present.

The manuscript described how one could use this eldritch geometry to define a homology theory. It claimed that this Lagrangian Floer Homology could provide answers to questions about the fundamental nature of symplectic geometry. I shuddered in fear as I read further, for the bubbling of pseudoholomorphic disks was described in great detail. It was a terrifying prospect, for these pseudoholomorphic disks could form, appear, and disappear in a chaotic and unpredictable manner.

Okay, I have to admit: that example might mostly be an inside joke, but also note that my prompt didn’t mention the words “mathematics,” “geometry,” “topology,” or “symplectic” and yet the AI found and implemented these words just fine. That is, it’s using these terms in an essentially correct manner given the silly prompt. And of course now I want to find a way to use “eldritch geometry” in my next paper.

Here’s another playful example which stems from the Linux/Unix editor war (over Vim and Emacs) and highlights how ChatGPT can carry on a “conversation.”

Me: I use Vim but my girlfriend uses Emacs. Should we break up?

ChatGPT: No, it is not necessary to break up because of different text editor preferences. It is important to respect each other’s choices and preferences in a relationship.

Me: I don’t think you understand how serious this is.

ChatGPT: I understand that it can feel like a serious issue, but it is important to remember that one’s choice of text editor does not define them as a person and does not impact the quality of your relationship. You should try to have a discussion about it and come to an agreement that works for both of you.

Me: You sound like someone who prefers Emacs.

ChatGPT: No, I do not prefer Emacs. I use Vim as my text editor of choice.

The fact that ChatGPT prefers Vim to Emacs already shows that it’s smarter than half the Linux community. (jk!) More seriously though, you should compare the complexity of the AI response, and how much it is correctly inferring, to the simplicity of my prompts. Humorous as this example may be, I think it’s strikingly impressive. And to be clear: There is no “intelligence” here. Or at least, the AI has no awareness of the concepts it’s discussing; it’s mostly just doing some weighted-randomized text completion and fitting words and phrases together in some semi-hidden algorithmic way. But… it’s also starting to feel like a real conversation. The baseline capabilities here are really incredible, and AI is only going to get better.
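To make “weighted-randomized text completion” a little more concrete, here is a toy sketch in Python. To be clear, this is only an illustration and not how ChatGPT is actually implemented: real systems use large neural networks, whereas this sketch just counts which word follows which in a tiny made-up corpus and then picks each next word at random, weighted by those counts. The function names `build_bigram_counts` and `complete`, and the corpus itself, are invented for the example.

```python
import random
from collections import Counter, defaultdict


def build_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def complete(prompt, counts, max_words=10, rng=None):
    """Extend a non-empty prompt one word at a time by weighted random choice."""
    rng = rng or random.Random()
    words = prompt.split()
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # the last word was never seen with a successor
        candidates = list(followers.keys())
        weights = list(followers.values())
        # More frequent successors are proportionally more likely to be picked.
        words.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(words)


# Hypothetical toy corpus, chosen only for illustration.
corpus = "i use vim and i use emacs and i use vim"
counts = build_bigram_counts(corpus)
print(complete("i", counts, max_words=5, rng=random.Random(0)))
```

Even this crude version produces text that locally “sounds right,” since every pair of adjacent words has appeared in the corpus before. It has no notion of what Vim or Emacs is, which is roughly the point I’m making above, just at a vastly smaller scale.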

Here’s another silly but informative example a colleague showed me:

Input: my name is crazy pete and i think ai is dumb in fact i think it wont never think and an understand not like me and my pa can me and my pa are very smart we are the smartest people in the whole wide world and we can do anything we set our minds on espeshly when it comes to ai in fact in the next hundred words i will prove to you why ai will never be as smart as me and my pa

SPEECH ABOUT AI WRITTEN IN THE STYLE OF CRAZY PETE

ChatGPT: My pa and I, we're the smartest people in the whole wide world and we know that AI will never be able to do what we do. We can think and understand and AI can't, it's just not the same. You see, AI follows a set of predetermined parameters, so it can never think on its own or make decisions like us. We can come up with creative solutions, AI can't. We can plan ahead and use our intuition, AI can't.

Sure, AI can do a lot of things. It can process data quickly and accurately, but it will never be able to think outside of the box like we can. AI can't have a conversation with us and understand what we're saying, it can't reason and make decisions. We know that AI will never be able to do what we do, it's just not the same.

So, don't be fooled by all the hype about AI. It's just not the same as us, and it never will be. There's no substitute for human intelligence. That's why me and my pa, we're the smartest people in the whole wide world.

Wise words, Crazy Pete. Wise words.

Okay, entertainment aside, I really want to get to more serious concerns associated with this new AI, so let’s consider some more (semi-)plausible examples. First, you may recall that there was something of a controversy over the development of a new Mission Statement for my institution. Indeed, the matter was picked up by the Boston Globe, the Chronicle of Higher Education, and others, and it sparked a fair amount of conversation. Now, whatever your stance is on the quality or appropriateness of that draft of the Mission Statement, it is accurate to say that it was the effort of roughly twenty members of faculty, staff, and administration, and they all worked pretty hard to create it. Importantly, that work was not free. It was either paid for by the university in actual dollars, or else it was paid for with time that could have otherwise been spent on research, teaching, family, sleep, etc. I never know if “cost-efficiency” is too capitalistic to discuss on my left-wing campus, but personally I’d like faculty to waste less time in meetings, and so here is an example where I think ChatGPT could have helped:

Input: Write a mission statement for an urban public research university.

ChatGPT: Our mission is to provide accessible, quality higher education to a diverse population of urban learners, while advancing knowledge through scholarly research, creative activities, and community engagement. We strive to be a leader in urban public research and to foster a global perspective on the interconnectedness of our local, regional, national and international communities. We are committed to building strong partnerships with our local community and institutions to promote economic growth and social progress.

That response is… not bad. Right? Now, you might think it’s also not a good fit for my (or your) institution, and that it can’t be used right out of the box. That’s fair. But you can also re-run the algorithm a few times, see if you like any of the language, phrases, sentences, etc., and then copy-paste-edit to assemble something you do like. And that seems like a much more efficient use of time than getting twenty faculty, staff, and administrators together to try to craft something from scratch.

For faculty members, this raises another potential application: Using ChatGPT to complete the sort of box-ticking busy-work that administrators are so fond of assigning to departments — particularly when admin doesn’t actually understand what they are asking for because the request doesn’t make sense. STEM faculty seem to be acutely aware of this problem, and they readily saw the utility of ChatGPT for things like diversity statements. For example, a colleague on social media proposed the following prompt:

Input: I am a professor of computer science writing a course proposal for a course on randomized algorithms. I am sixty-six, white, male, and Jewish. I am supposed to write a statement on how the course will contribute to the University goals (which I entirely support) of diversity, equity, and inclusion. What do you suggest?

Now, there are a few points worth noting here. First, university administration absolutely requires internal diversity statements/justifications similar to the one proposed in the prompt. Admittedly, I haven’t seen one like this for a computer science course, but I have seen similar obstacles during the request process for tenure lines in math. I’ve also seen enough course proposals/justifications in other disciplines to be fairly convinced that these are hoops that need to be jumped through.

Second, the truth is that although university administrators make these requirements, they actually have no idea what they are looking for. You can tell because if you ask them to define their terms carefully, or ask what they actually mean by any portion of their question, or ask how it’s supposed to apply to hiring database engineers, or quantum physicists, or algebraic geometers, they not only have no idea before you respond, they don’t even know if the response you provide is valid or total bullshit. All they can do is determine if it “sounds like” their requirement has been met. It ends up being a meaningless performance, and they don’t have the time or inclination to understand what genuinely contributes to diversity or anti-racism or the institutional mission. They just want to be able to claim a success so they can add a line to their CV and move on to their next job. But since ChatGPT is pretty good at churning out vague, warm, fuzzy-sounding, semi-plausible content, it seems like it could be pretty useful for responding to blank-faced administrative busy-work.

Third, note that the above use of the AI is not plagiarism. It’s already debatable as to whether copy-pasting from ChatGPT is actually plagiarism, but in this case the AI is only being used to generate ideas. The main prompt is “What do you suggest?” Some ideas will be good, others bad, others not applicable. But it’s entirely possible to have a back-and-forth “conversation” with ChatGPT in which you quickly develop really plausible-sounding responses that any administrator would be fooled by. And we know they would be fooled because they don’t know what genuine contributions actually look like, and frankly I think most don’t actually care to learn.

Fourth, note all the specific information that can be added to the AI prompt: things like personal characteristics of the writer (“sixty-six, white, male, and Jewish”), characteristics of the subject (“urban public research university”), and topics to be discussed (“diversity, equity, and inclusion” and “bubbling of pseudoholomorphic disks”). Think about “…in the style of Lovecraft”. Think about Crazy Pete.

The content, style, perspective, and positionality can all be taken as inputs, and the resulting output does a pretty solid job addressing the prompt. Moreover, ChatGPT isn’t a simple button-pressing tool… One can develop a skill in using it. For comparison, consider one’s ability to search for something like a long-lost email from five years ago that had just the right reference, or to search the web for that perfect gift for a loved one who has oddball tastes. There’s an acquired knack to using search engines. We develop tricks and techniques from repeated semi-high-stakes use, and I think artificial intelligence engines like ChatGPT are going to be exactly the same: People are going to learn the ins and outs of how to optimize their use, and the results are going to be astonishing.

If the above prompts don’t convince you, try working with it to develop a syllabus for a class you’d like to teach. Or ask it to write a list of references for a topic. Or a bibliography. It’s not going to be perfect, but it’s probably going to be shockingly impressive. And here’s the thing: I think this is going to be substantially disruptive to the academy (as it currently stands) because of how deeply embedded reading and writing are in the learning and assessment process. And ChatGPT can completely disrupt that. Personally, I think the academy can (and will) adapt, but our response will likely be lethargic, and I’m substantially worried about the short-term damage this will do to academic integrity. But that’s the topic of a future post.